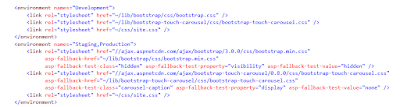

First, in the document head for our CSS:

And then later for our scripts at the end of the body

Each of these code segments uses the environment tag helper to insert the appropriate CSS and JavaScript into the document. In Development, all of the CSS and JavaScript is served from the local web server. In Staging and Production, however, the third-party libraries (Bootstrap, jQuery, Hammer and the Bootstrap touch carousel) are served from the ASP.NET public CDN. This is what the URLs pointing at the aspnetcdn.com domain are all about.
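
The selection logic described above can be sketched in a few lines of JavaScript. This is only an illustration of the idea, not the tag helper's actual implementation: the function name `jqueryUrlFor` and the local path are made up for this example, and the CDN URL is the jQuery one discussed later in this post.

```javascript
// Hypothetical local path; the real template may use a different one.
const LOCAL_JQUERY = "/lib/jquery/jquery.js";
// CDN location of jQuery on the ASP.NET CDN.
const CDN_JQUERY = "//ajax.aspnetcdn.com/ajax/jQuery/jquery-1.11.3.min.js";

// Hypothetical helper: pick the script URL for a given environment name.
function jqueryUrlFor(environment) {
  // Development serves everything from the local web server.
  if (environment === "Development") {
    return LOCAL_JQUERY;
  }
  // Staging and Production point at the public CDN.
  if (environment === "Staging" || environment === "Production") {
    return CDN_JQUERY;
  }
  throw new Error("Unknown environment: " + environment);
}
```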

This project was created with the default template that ships with the release candidate of Visual Studio 2015. So things could change by the final release, but since this is a release candidate, one would think this code would closely resemble what will actually ship with the final version.

So what is a public CDN and when is it appropriate to use one? If you have read The Pragmatic Programmer, you will remember that one of the tips in the book is that when code is generated by a wizard, you need to fully understand what that code does, because that code is now in your project and you are responsible for it. In this case, the code comes from the default template, but the principle is the same. We need to understand what a public CDN is and whether it is really the right choice for our project.

What is a Public CDN

A public CDN is basically a website that hosts popular CSS frameworks and JavaScript libraries that you can link to and use in your pages. Take for example a library like jQuery. We are accustomed to seeing a script tag like this:

<script src="/scripts/external/jquery-1.11.3.min.js"></script>

When the browser processes this script tag, it will download jQuery from your web server.

However, with a public CDN, we are going to change the URL of our script tag to point at the location of the correct version of jQuery on the CDN server. So now we will have something that looks like this:

<script src="//ajax.aspnetcdn.com/ajax/jQuery/jquery-1.11.3.min.js"></script>

The difference is that when the browser encounters this script tag in our HTML document, it is not going to download jQuery from our web server, but from a Microsoft web server for the ASP.NET CDN.

Microsoft is not the only company with a public CDN that is available for anyone to use. There are others, and I've listed some of the most popular below. They vary in terms of which libraries each one has available, with Cloudflare's cdnjs having the most extensive collection at the time of writing:

- ASP.NET (Microsoft) - http://www.asp.net/ajax/cdn

- Google - https://developers.google.com/speed/libraries/

- cdnjs - https://cdnjs.com/

- jQuery - https://code.jquery.com/

Benefits From Using a Public CDN

There are some clear benefits to using a public CDN.

- We have shifted the workload for serving these files from our web server to the CDN server, which saves us a little bit of load on our server and, most importantly, network bandwidth.

- Browsers limit the number of simultaneous connections they will make to a single web server. So by having some resources loaded from a CDN server, we are increasing the number of simultaneous resources that can be downloaded.

- Every major CDN provider has CDN servers distributed around the world. So this means the user will be downloading that copy of jQuery from a server that is relatively nearby, not one across the country or across the world. This helps to decrease network latency, the time it takes for packets to travel over the network from one place to another.

- All of the major CDNs add the appropriate cache headers to their responses, so that any library loaded from the CDN will be cached for a year, which saves bandwidth on subsequent requests the user makes to the site.

- If multiple sites all use the same library version on the same CDN, then this library will be cached by the browser when the user visits the first site. Then, when the user visits a second site and the browser sees the same URL for that library, it doesn't need to download the library because it already has the contents of that URL cached. In this way, the more sites that use a CDN the better, because there is a possibility that one of the common JavaScript libraries you use on your site is already cached in the browser due to the user requesting that exact same CDN URL from another site they have visited.
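
The last point depends on the URL matching exactly. Here is a toy model of that behavior, assuming a cache keyed purely by URL as described above. The class name `ToyBrowserCache` is made up for this example, and a real browser cache is considerably more involved.

```javascript
// Toy model of a browser cache: entries are keyed by the full URL.
class ToyBrowserCache {
  constructor() {
    this.entries = new Set();
    this.downloads = 0;
  }

  // Returns "cache hit" if this exact URL was fetched before,
  // otherwise records the URL and counts a download.
  fetch(url) {
    if (this.entries.has(url)) {
      return "cache hit";
    }
    this.entries.add(url);
    this.downloads += 1;
    return "downloaded";
  }
}

const cache = new ToyBrowserCache();
const cdnUrl = "https://ajax.aspnetcdn.com/ajax/jQuery/jquery-1.11.3.min.js";

cache.fetch(cdnUrl); // site 1: "downloaded"
cache.fetch(cdnUrl); // site 2, same CDN and version: "cache hit"
cache.fetch("https://code.jquery.com/jquery-1.11.3.min.js"); // same version, different CDN: "downloaded"
```

Only the second request is served from the cache. The same library version from a different CDN is a different URL, so it is downloaded again.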

So in a nutshell, a CDN is just a web server that hosts these popular libraries so that you can reference them from your web pages, which saves your own web server from having to serve these files.

Is There a Downside?

When I first learned about public CDNs, I felt there was literally no reason not to use one for every website that I worked on. As I have learned more and carefully studied the issues related to web performance, my enthusiasm for public CDNs has become much more measured, and I'll describe why below.

The first thing we need to understand is that we have now introduced an additional network server into our hosting picture, and this is a server that we do not control. It is true that CDNs are engineered for very high reliability, but as we have learned time and time again, every system will experience some down time. Google goes down. Microsoft goes down. Facebook goes down. Not very much, but it does happen. And when a CDN goes down, your pages are probably not going to be functional, because they most likely depend on the JavaScript you are loading from it.

In this blog post by Scott Hanselman, he describes a situation where the ASP.NET CDN server in the Northeastern United States went down for a period of time. The good news is that because CDNs are distributed around the globe, this failure only affected users in the Northeastern United States. The bad news is that this type of partial failure is much harder to diagnose. Why does it work for the user in California and not for the user in Massachusetts?

So the bottom line is that there will be some small amount of down time if you use a public CDN. It won't be much, but making use of a public CDN means that there is another component in your system that needs to be up in order for your system to be up.

If you read the article, you will see some JavaScript code that checks whether a library loaded and, if not, serves a local copy of the library to the browser. This code generally looks something like this:

<script src="http://ajax.aspnetcdn.com/ajax/jquery/jquery-2.0.0.min.js"></script>
<script>
  if (typeof jQuery == 'undefined') {
    document.write(unescape("%3Cscript src='/js/jquery-2.0.0.min.js' type='text/javascript'%3E%3C/script%3E"));
  }
</script>

If you search the web, you will find many similar code fragments. What is important, though, is to understand how a public CDN fails and how this code fragment responds. If a web server is down (and this includes a public CDN), you usually do not get a failure status back right away. Instead, the browser waits for the request to the server (the public CDN) to time out. Depending on the browser, it will wait between 20 and 120 seconds before timing out, and only then fall back to the local script.

One of the important rules about web performance is that the browser will not render any DOM elements that are below a script tag that has not finished loading. So if you have the code above in your document head and the public CDN is unreachable, the user will be staring at a blank screen for 20 to 120 seconds before the fallback code fires and loads the local version of the script. Even if your script tags are at the bottom of your HTML body as they should be, your page is probably missing major functionality for 20 to 120 seconds. The user, of course, will not know that the public CDN is unreachable, so to them the page will appear partially (or perhaps completely) non-functional, and they may very well decide to leave in that period of time.
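
One way to bound that wait is to load the library asynchronously and give up on the CDN after a deadline of your own choosing. The following is a minimal sketch of that idea, not code from the article mentioned above. The names `withTimeout`, `loadScript` and `loadWithFallback` are made up for this example, and the 3 second deadline in the usage comment is an arbitrary assumption.

```javascript
// Reject if `promise` has not settled within `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error("timed out after " + ms + " ms")), ms);
  });
  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Browser-only helper: inject a <script> tag and resolve once it has loaded.
function loadScript(url) {
  return new Promise((resolve, reject) => {
    const el = document.createElement("script");
    el.src = url;
    el.onload = () => resolve(url);
    el.onerror = () => reject(new Error("failed to load " + url));
    document.head.appendChild(el);
  });
}

// Try the CDN first, but switch to the local copy if the CDN request
// fails or takes longer than `timeoutMs`. Resolves with the URL that loaded.
// `loader` is a parameter so the logic can be exercised outside a browser.
async function loadWithFallback(cdnUrl, localUrl, timeoutMs, loader = loadScript) {
  try {
    return await withTimeout(loader(cdnUrl), timeoutMs);
  } catch (err) {
    return loader(localUrl);
  }
}

// Usage in a page (the deadline value is an assumption):
// loadWithFallback(
//   "//ajax.aspnetcdn.com/ajax/jquery/jquery-2.0.0.min.js",
//   "/js/jquery-2.0.0.min.js",
//   3000
// ).then(() => { /* code that depends on jQuery goes here */ });
```

There are trade-offs with this approach. Scripts injected this way run asynchronously, so any code that depends on the library has to wait for the returned promise. And if the CDN copy finally arrives after the deadline, the library may end up being executed twice.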

Again, public CDNs are designed to be highly available, but the point is that if for any reason the CDN server becomes unreachable over the network, there are going to be serious consequences for your page. Network connectivity is something we tend to take for granted until we don't have it.

Re-evaluating the Benefits

The main benefit we outlined above was that we save our web server from needing to serve these common CSS frameworks and JavaScript libraries, which ultimately saves load and bandwidth on our web server. But here is another important point to consider. CSS and JavaScript files are static files, and as such we should be setting the caching headers in the responses for these files so that the only time they have to be downloaded is on the very first page view the user makes on our site. After this, on every other page the user browses to, the browser will issue a conditional request for these resources, to which the web server will send back an HTTP 304 (Not Modified) response, which is only a single packet. So using a public CDN really means that we are going to have some savings on the very first page the user visits on our site, but after that, the savings are basically negligible.

So what about the advantage of public CDNs allowing more files to be downloaded simultaneously because resources are loaded from multiple servers? This is still an advantage. However, you can (and should) look at bundling together your CSS and JavaScript files, which has the effect of reducing the number of requests the browser has to make to download your page. So this is really just a different way of solving the problem.
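
To make the conditional request described above concrete, here is a minimal sketch of the decision a server makes when a static file is requested. It assumes validation with an `ETag` header. The function name `respondToStaticRequest` and the shape of the `file` object are made up for this example, and real web servers handle this for you.

```javascript
// Decide how to answer a request for a static file.
// `requestHeaders` uses lowercase header names (as Node's http module does).
// `file` is a hypothetical record of the form { etag: string, body: string }.
function respondToStaticRequest(requestHeaders, file) {
  const headers = {
    "ETag": file.etag,
    // "no-cache" lets the browser store the file but makes it revalidate
    // with a conditional request before each reuse.
    "Cache-Control": "no-cache",
  };

  // The browser sends back the ETag it has cached. If it still matches,
  // answer 304 Not Modified with no body.
  if (requestHeaders["if-none-match"] === file.etag) {
    return { status: 304, headers, body: "" };
  }

  // First visit, or the file has changed: send the full content.
  return { status: 200, headers, body: file.body };
}

const file = { etag: '"v1"', body: "/* bundled site JavaScript */" };
const firstVisit = respondToStaticRequest({}, file);
const laterVisit = respondToStaticRequest({ "if-none-match": '"v1"' }, file);
// firstVisit.status is 200 and carries the body;
// laterVisit.status is 304 and carries no body.
```

With a long `max-age` value in `Cache-Control` instead, the browser can skip even the conditional request until the cached copy expires.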

Finally, what about the fact that if many sites on the web use the same library version from the same CDN, there is a chance the user will already have the library cached when they visit your site? It is true that this can still happen. In reality, though, there are so many different versions of a library like jQuery in use today, so many different CDNs, and so many sites that still serve the library themselves that the probability of the library from the CDN already being cached is very low.

What we are left with is that CDNs are geographically distributed around the world. If you are running a public site on the internet that receives hits from around the country or around the world, this is an important advantage. If almost all of the traffic to your site comes from a local area, then this is not so important.

The point here is that if you implement good practices like caching the static content on your website and bundling and minifying your JavaScript, the advantages of using a public CDN are not nearly so great. So what we are left with then is evaluating these smaller advantages against the risk of the CDN being unreachable.

So When Is a Public CDN the Right Choice

If you are running a public website, especially a website that is hosted in the cloud, and you have visitors from across the country or across the world, then I think a public CDN makes sense. You will get some lift from the geographic distribution that public CDNs offer. You also have to consider that in many cloud hosting scenarios, you are charged based on the bandwidth that you use, so any amount of bandwidth you can offload is important.

Likewise, if you are hosting a site that has bandwidth limits on the amount of data you serve, a public CDN again makes a lot of sense because you want to conserve every KB of bandwidth you can. And if you have a website that is nearing capacity in terms of the load it serves, using a CDN can be a quick way to transfer some of the load to another server while you work on a longer term solution.

If you are developing an internally facing (intranet) web application, I think that using a public CDN is the wrong choice. Your internal web app probably accesses mostly other internal resources (think databases and web services within your firewall). If you add in a public CDN, you are now reliant upon a component outside of your firewall. If for any reason your data center experiences network connectivity issues with the internet, your internally facing app is now going to be impacted. This doesn't have to be the CDN going down. It could be your ISP having issues or even a component failing in your network room. In this scenario, I think all you are doing is adding risk by using a public CDN for these apps.

Plus, for an internal web application, you have a set of users that is nearly constant, and most likely in only a couple of locations. So all of your CSS and JavaScript will already be cached in these users' browsers, and you don't really have to solve the problem of first page views from different geographic locations. So in the internal application scenario, I don't think using a public CDN makes any sense.

There are clearly many other scenarios, and ultimately you will have to analyze for yourself whether a public CDN makes sense for your application. This post should help you think through what some of the advantages are and whether those advantages will really apply to you. If you have already implemented caching of your static content and bundling of your CSS and JavaScript, then any lift you get from a CDN is probably going to be on the smaller side. So what you have to do is balance this against the potential consequences of the CDN being unreachable for any reason. For cloud hosted apps or apps that serve users across a wide geographic region, this tradeoff is probably worth making. In other cases, though, the potential gain is so small that you will probably be better off just serving these files on your own.